Bio |

Google Scholar |

I am a Research Scientist at Google DeepMind, working on Gemini. Before DeepMind, I was a Research Scientist at Grammarly, where I developed robust and scalable approaches to improve the quality of written communication using Natural Language Processing and Machine Learning. My research interests lie at the intersection of large language models and controllable text generation for text revision. I also co-organize the Workshop on Intelligent and Interactive Writing Assistants (In2Writing), and I frequently collaborate with Dongyeop Kang and his amazing group at Minnesota NLP. Previously, I worked at x.ai on end-to-end Natural Language Understanding (NLU) for conversational AI scheduling assistance. I received my Master's from Columbia University, advised by Prof. Luis Gravano and Prof. Tony Jebara. I received a dual degree in Computer Science (Bachelor's and Master's by Research) from IIIT Hyderabad, advised by Prof. K. S. Rajan, where I worked on modeling spatio-temporally evolving phenomena, such as foodborne illnesses. |

| [2024] |

2 papers (mEdIT & ContraDoc) accepted to NAACL 2024! |

| [2024] |

2 papers (CoBBLER & Threads of Subtlety) accepted to ACL 2024! |

| [2024] |

Paper accepted at the PERSONALIZE Workshop at EACL 2024. |

| [2023] |

2 papers (CoEdIT & Speakerly™) accepted to EMNLP 2023 (Findings and Industry Track)! |

| [2023] |

Upcoming Invited Talks at QCon SF, Data Science Salon SF, and NLP Summit 2023. |

| [2023] |

Talk at ConvAI Workshop at ACL 2023. |

| [2023] |

Paper accepted at the TrustNLP Workshop @ ACL 2023. |

| [2023] |

Co-organizing the In2Writing Workshop at CHI 2023. |

| [2023] |

Invited Talk at Bugout.dev meetup. |

| [2023] |

Talk and Panel Discussion at RE-WORK Summit, 2023. |

| [2022] |

Paper accepted at EMNLP 2022. |

| [2022] |

Invited Talk at Swiggy Bytes. |

| [2022] |

Best paper award at the In2Writing Workshop at ACL 2022. |

| [2022] |

Co-organizing the In2Writing Workshop at ACL 2022. |

| [2022] |

Paper accepted at ACL 2022. |

| [2021] |

Paper accepted at EACL 2021. |

| [2020] |

Paper accepted at EMNLP 2020. |

| Rickard Stureborg (Duke University) |

Writing with Large Language Models. |

| James Mooney (University of Minnesota) |

Efficient LLM Inference. |

| Ryan Koo (University of Minnesota) |

LLMs for Intelligent Writing Assistance. |

| Risako Owan (University of Minnesota) |

Human Preference Learning. |

| Bashar Alhafni (New York University) |

Personalized Text Generation. |

| Jierui Li (UT Austin) |

Document-level reasoning with LLMs. |

| Zae Myung Kim (University of Minnesota) |

Human-in-the-loop Iterative Text Revision. |

| Olexandr Yermilov (Ukrainian Catholic University) |

Privacy- and Utility-preserving NLP. |

| Wanyu Du (University of Virginia) |

Iterative Text Revision. |

Please refer to Google Scholar or Semantic Scholar for the most up-to-date list. |

2022 |

|

|


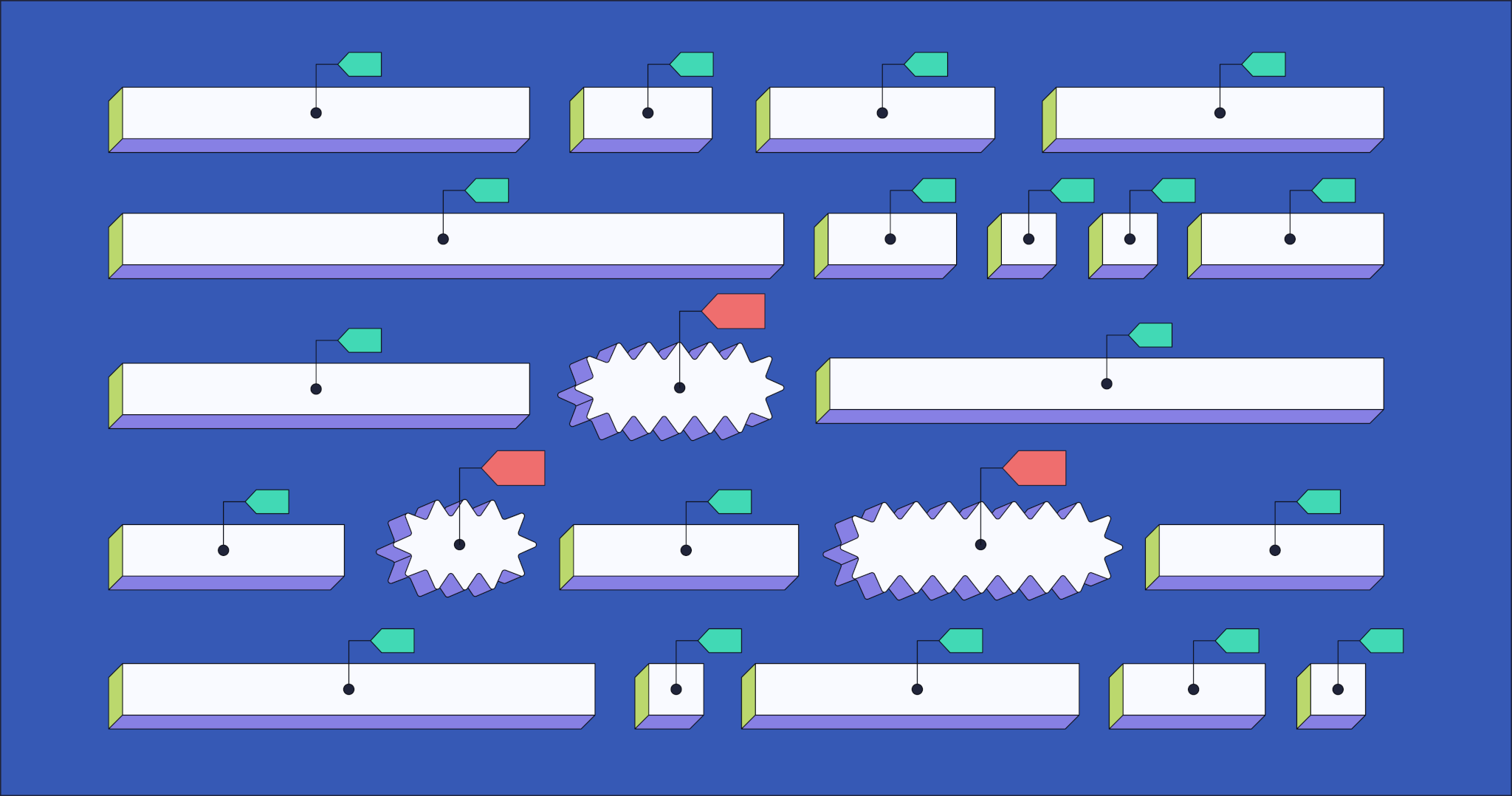

Iterative text revision improves text quality by fixing grammatical errors, rephrasing for better readability or contextual appropriateness, or reorganizing sentence structures throughout a document. Most recent research has focused on understanding and classifying different types of edits in the iterative revision process from human-written text instead of building accurate and robust systems for iterative text revision. In this work, we aim to build an end-to-end text revision system that can iteratively generate helpful edits by explicitly detecting editable spans (where-to-edit) with their corresponding edit intents and then instructing a revision model to revise the detected edit spans. Leveraging datasets from other related text editing NLP tasks, combined with the specification of editable spans, leads our system to more accurately model the process of iterative text refinement, as evidenced by empirical results and human evaluations. Our system significantly outperforms previous baselines on our text revision tasks and other standard text revision tasks, including grammatical error correction, text simplification, sentence fusion, and style transfer. Through extensive qualitative and quantitative analysis, we make vital connections between edit intentions and writing quality, and better computational modeling of iterative text revisions.

@inproceedings{kim-etal-2022-improving,
    title = "Improving Iterative Text Revision by Learning Where to Edit from Other Revision Tasks",
    author = "Kim, Zae Myung and Du, Wanyu and Raheja, Vipul and Kumar, Dhruv and Kang, Dongyeop",
    booktitle = "Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing",
    month = dec,
    year = "2022",
    address = "Abu Dhabi, United Arab Emirates",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2022.emnlp-main.678",
    pages = "9986--9999",
}

|

|


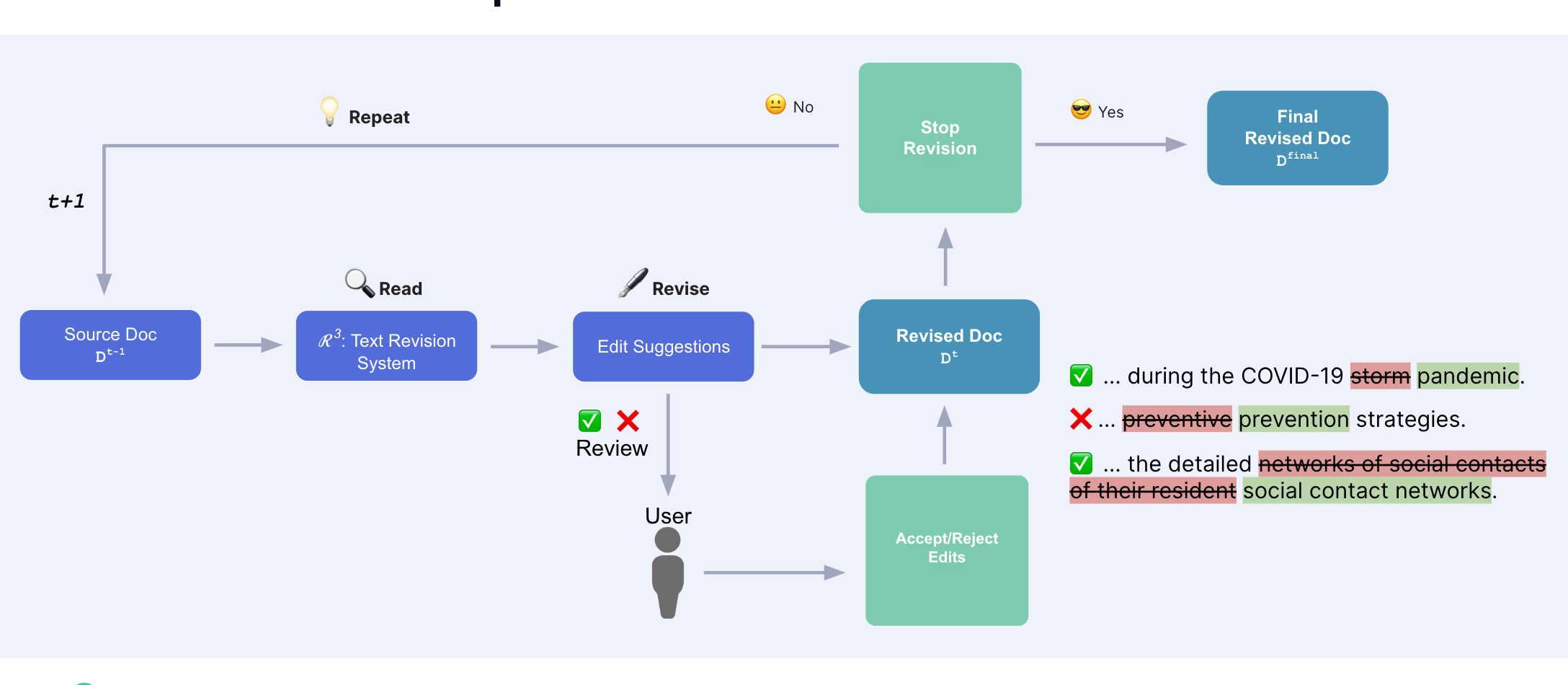

Revision is an essential part of the human writing process. It tends to be strategic, adaptive, and, more importantly, iterative in nature. Despite the success of large language models on text revision tasks, they are limited to non-iterative, one-shot revisions. Examining and evaluating the capability of large language models for making continuous revisions and collaborating with human writers is a critical step towards building effective writing assistants. In this work, we present a human-in-the-loop iterative text revision system, Read, Revise, Repeat (R3), which aims at achieving high quality text revisions with minimal human efforts by reading model-generated revisions and user feedbacks, revising documents, and repeating human-machine interactions. In R3, a text revision model provides text editing suggestions for human writers, who can accept or reject the suggested edits. The accepted edits are then incorporated into the model for the next iteration of document revision. Writers can therefore revise documents iteratively by interacting with the system and simply accepting/rejecting its suggested edits until the text revision model stops making further revisions or reaches a predefined maximum number of revisions. Empirical experiments show that R3 can generate revisions with comparable acceptance rate to human writers at early revision depths, and the human-machine interaction can get higher quality revisions with fewer iterations and edits. The collected human-model interaction dataset and code are available at https://github.com/vipulraheja/IteraTeR. Our system demonstration is available at https://youtu.be/lK08tIpEoaE.

@inproceedings{du-etal-2022-read,
    title = "Read, Revise, Repeat: A System Demonstration for Human-in-the-loop Iterative Text Revision",
    author = "Du, Wanyu and Kim, Zae Myung and Raheja, Vipul and Kumar, Dhruv and Kang, Dongyeop",
    booktitle = "Proceedings of the First Workshop on Intelligent and Interactive Writing Assistants (In2Writing 2022)",
    month = may,
    year = "2022",
    address = "Dublin, Ireland",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2022.in2writing-1.14",
    doi = "10.18653/v1/2022.in2writing-1.14",
    pages = "96--108",
}
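The accept/reject loop described in the R3 abstract above can be sketched roughly as follows. This is only an illustrative sketch, not the released R3 implementation: the function `revise_iteratively`, the `Edit` tuple type, the `max_depth` parameter, and the toy model and writer are all hypothetical names introduced here, and edits are applied by simple string replacement purely for demonstration.

```python
from typing import Callable, List, Tuple

# Hypothetical edit representation: (original span, suggested replacement).
Edit = Tuple[str, str]


def revise_iteratively(
    document: str,
    suggest_edits: Callable[[str], List[Edit]],
    accept: Callable[[Edit], bool],
    max_depth: int = 3,
) -> Tuple[str, int]:
    """Apply accepted edits until the model proposes none or max_depth is reached."""
    depth = 0
    while depth < max_depth:
        edits = suggest_edits(document)
        if not edits:  # the model has stopped making further revisions
            break
        for old, new in edits:
            if accept((old, new)):  # the writer accepts or rejects each edit
                document = document.replace(old, new, 1)
        depth += 1
    return document, depth


# Toy stand-ins for the revision model and the human writer (assumptions).
def toy_model(text: str) -> List[Edit]:
    fixes = {"teh": "the", "recieve": "receive"}
    return [(bad, good) for bad, good in fixes.items() if bad in text]


def toy_writer(edit: Edit) -> bool:
    return True  # accept every suggestion


final_text, depth_used = revise_iteratively(
    "I recieve teh draft.", toy_model, toy_writer
)
print(final_text, depth_used)
```

The two exit conditions mirror the ones named in the abstract: the loop ends when the model suggests nothing further, or when the predefined maximum number of revisions is reached.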

|

|


Writing is, by nature, a strategic, adaptive, and, more importantly, an iterative process. A crucial part of writing is editing and revising the text. Previous works on text revision have focused on defining edit intention taxonomies within a single domain or developing computational models with a single level of edit granularity, such as sentence-level edits, which differ from human’s revision cycles. This work describes IteraTeR: the first large-scale, multi-domain, edit-intention annotated corpus of iteratively revised text. In particular, IteraTeR is collected based on a new framework to comprehensively model the iterative text revisions that generalizes to a variety of domains, edit intentions, revision depths, and granularities. When we incorporate our annotated edit intentions, both generative and action-based text revision models significantly improve automatic evaluations. Through our work, we better understand the text revision process, making vital connections between edit intentions and writing quality, enabling the creation of diverse corpora to support computational modeling of iterative text revisions.

@inproceedings{du-etal-2022-understanding-iterative,
    title = "Understanding Iterative Revision from Human-Written Text",
    author = "Du, Wanyu and Raheja, Vipul and Kumar, Dhruv and Kim, Zae Myung and Lopez, Melissa and Kang, Dongyeop",
    booktitle = "Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",
    month = may,
    year = "2022",
    address = "Dublin, Ireland",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2022.acl-long.250",
    doi = "10.18653/v1/2022.acl-long.250",
    pages = "3573--3590",
}

|

2021 |

|


Edit-based approaches have recently shown promising results on multiple monolingual sequence transduction tasks. In contrast to conventional sequence-to-sequence (Seq2Seq) models, which learn to generate text from scratch as they are trained on parallel corpora, these methods have proven to be much more effective since they are able to learn to make fast and accurate transformations while leveraging powerful pre-trained language models. Inspired by these ideas, we present TST, a simple and efficient Text Simplification system based on sequence Tagging, leveraging pre-trained Transformer-based encoders. Our system makes simplistic data augmentations and tweaks in training and inference on a pre-existing system, which makes it less reliant on large amounts of parallel training data, provides more control over the outputs and enables faster inference speeds. Our best model achieves near state-of-the-art performance on benchmark test datasets for the task. Since it is fully non-autoregressive, it achieves faster inference speeds by over 11 times than the current state-of-the-art text simplification system.

@inproceedings{omelianchuk-etal-2021-text,
    title = "{T}ext {S}implification by {T}agging",
    author = "Omelianchuk, Kostiantyn and Raheja, Vipul and Skurzhanskyi, Oleksandr",
    booktitle = "Proceedings of the 16th Workshop on Innovative Use of NLP for Building Educational Applications",
    month = apr,
    year = "2021",
    address = "Online",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2021.bea-1.2",
    pages = "11--25",
}

|

2020 |

|


Recent works in Grammatical Error Correction (GEC) have leveraged the progress in Neural Machine Translation (NMT), to learn rewrites from parallel corpora of grammatically incorrect and corrected sentences, achieving state-of-the-art results. At the same time, Generative Adversarial Networks (GANs) have been successful in generating realistic texts across many different tasks by learning to directly minimize the difference between human-generated and synthetic text. In this work, we present an adversarial learning approach to GEC, using the generator-discriminator framework. The generator is a Transformer model, trained to produce grammatically correct sentences given grammatically incorrect ones. The discriminator is a sentence-pair classification model, trained to judge a given pair of grammatically incorrect-correct sentences on the quality of grammatical correction. We pre-train both the discriminator and the generator on parallel texts and then fine-tune them further using a policy gradient method that assigns high rewards to sentences which could be true corrections of the grammatically incorrect text. Experimental results on FCE, CoNLL-14, and BEA-19 datasets show that Adversarial-GEC can achieve competitive GEC quality compared to NMT-based baselines.

@inproceedings{raheja-alikaniotis-2020-adversarial,
    title = "{A}dversarial {G}rammatical {E}rror {C}orrection",
    author = "Raheja, Vipul and Alikaniotis, Dimitris",
    booktitle = "Findings of the Association for Computational Linguistics: EMNLP 2020",
    month = nov,
    year = "2020",
    address = "Online",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2020.findings-emnlp.275",
    doi = "10.18653/v1/2020.findings-emnlp.275",
    pages = "3075--3087",
}

|

2019 |

|


Recent work on Grammatical Error Correction (GEC) has highlighted the importance of language modeling in that it is certainly possible to achieve good performance by comparing the probabilities of the proposed edits. At the same time, advancements in language modeling have managed to generate linguistic output, which is almost indistinguishable from that of human-generated text. In this paper, we up the ante by exploring the potential of more sophisticated language models in GEC and offer some key insights on their strengths and weaknesses. We show that, in line with recent results in other NLP tasks, Transformer architectures achieve consistently high performance and provide a competitive baseline for future machine learning models.

@inproceedings{alikaniotis-raheja-2019-unreasonable,
    title = "The Unreasonable Effectiveness of Transformer Language Models in Grammatical Error Correction",
    author = "Alikaniotis, Dimitris and Raheja, Vipul",
    booktitle = "Proceedings of the Fourteenth Workshop on Innovative Use of NLP for Building Educational Applications",
    month = aug,
    year = "2019",
    address = "Florence, Italy",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/W19-4412",
    doi = "10.18653/v1/W19-4412",
    pages = "127--133",
}

|

|


Recent work in Dialogue Act classification has treated the task as a sequence labeling problem using hierarchical deep neural networks. We build on this prior work by leveraging the effectiveness of a context-aware self-attention mechanism coupled with a hierarchical recurrent neural network. We conduct extensive evaluations on standard Dialogue Act classification datasets and show significant improvement over state-of-the-art results on the Switchboard Dialogue Act (SwDA) Corpus. We also investigate the impact of different utterance-level representation learning methods and show that our method is effective at capturing utterance-level semantic text representations while maintaining high accuracy.

@inproceedings{raheja-tetreault-2019-dialogue,
    title = "{D}ialogue {A}ct {C}lassification with {C}ontext-{A}ware {S}elf-{A}ttention",
    author = "Raheja, Vipul and Tetreault, Joel",
    booktitle = "Proceedings of the 2019 Conference of the North {A}merican Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers)",
    month = jun,
    year = "2019",
    address = "Minneapolis, Minnesota",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/N19-1373",
    doi = "10.18653/v1/N19-1373",
    pages = "3727--3733",
}

|

|

|
